
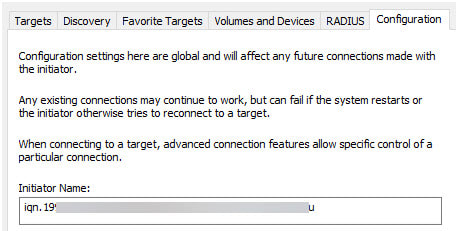

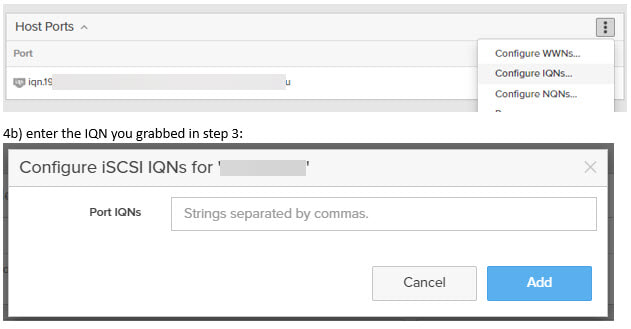

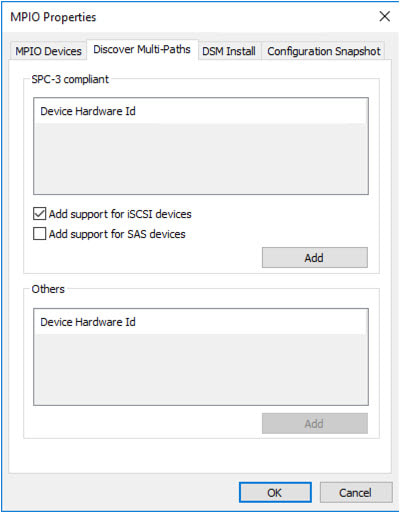

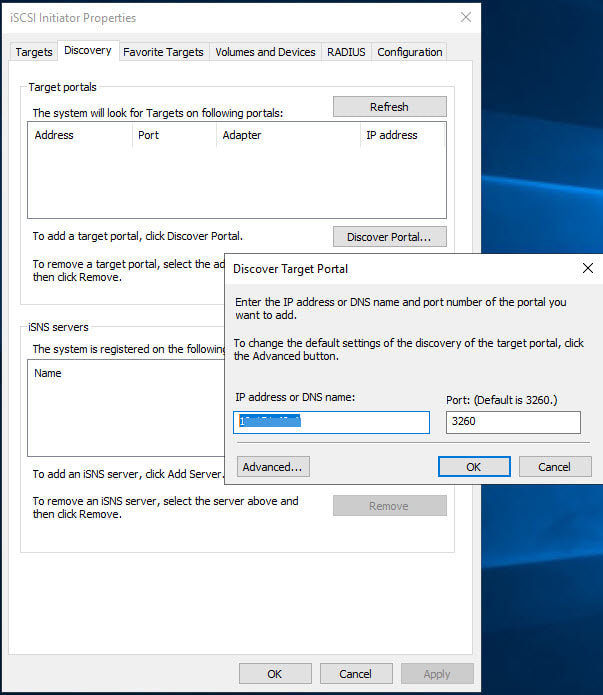

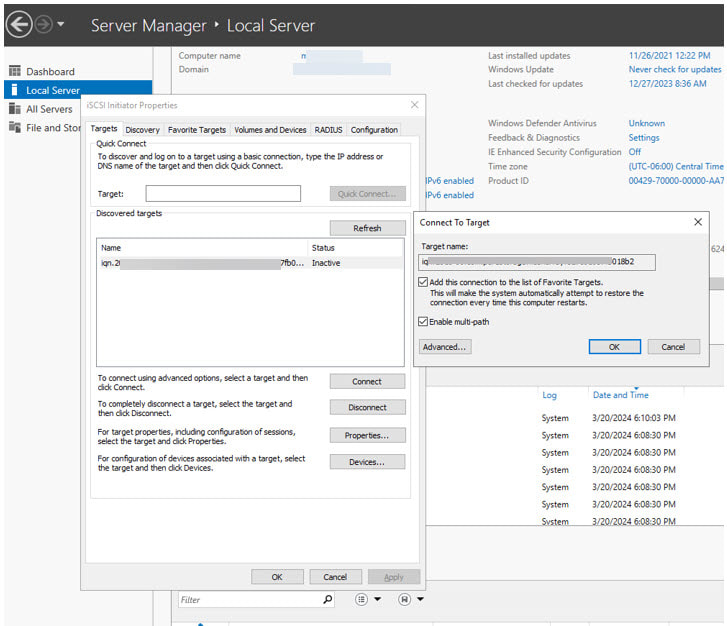

Sometimes we come across unique, specialized use cases where solutions must be found outside of the box. This particular use case for me involved industry physical security video recording for hundreds of cameras across many different geographic locations, with a caveat: the vendor software can ONLY write to a locally presented disk (no NAS locations, no cloud possibility). Conditions were also right in the storage sphere: a new large-capacity all-flash array had come to market with incredible pricing, so it was more attractive than using HCI storage for this solution (we needed ~2PB of storage space). We had never used iSCSI in this fashion before, so it was a journey of discovery for us. This is that journey.

Prep work:

1) Carve out a dedicated network for the iSCSI traffic and make sure the storage and the VMs can access it.
2) On each VM, add a vNIC to be used exclusively for iSCSI traffic.
3) On each VM, modify the registry according to vendor best practices. In our case that meant modifying two values under HKLM: TcpAckFrequency and TcpNoDelay.

Configuration & Presentation:

1) Get the IQN from the VM by opening iSCSI Initiator.
2) Register the VM initiator IQN with the storage array. Typically you will create a host/VM entry in the storage array and connect the initiator IQN to it.
3) On the VM, install MPIO if it's not already installed on the server you are setting up iSCSI on: https://support.purestorage.com/Solutions/Microsoft_Platform_Guide/Multipath-IO_and_Storage_Settings/Installing_Multipath-IO

   3a) Configure MPIO to mask the iSCSI volumes in Windows Server per the vendor specifications. This involves entering a vendor-specific string into the Device Hardware ID field in the MPIO Properties window.

   3b) Add iSCSI support on the MPIO Discover Multi-Paths tab as well.
4) Add the iSCSI discovery portals on the VM (server). In our case, we wanted 8 paths, 4 per controller, so each controller needs to be added to the target portals.
5) Go into iSCSI Initiator on the VM and connect to the discovered target. Be sure to enable multipath.
6) The new disk(s) should now show up in Disk Management on your VM and can be formatted and used.
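To make the path count concrete, here is a minimal Python sketch of the arithmetic behind "8 paths, 4 per controller". It is only a planning aid, not part of the Windows configuration itself. The controller names (`CT0`, `CT1`), the IP addresses, and the assumption that each controller exposes four iSCSI portals reached from a single initiator vNIC are all hypothetical; substitute whatever your array and network actually present.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class IscsiPath:
    """One initiator-to-portal session, i.e. one MPIO path."""
    initiator_ip: str
    controller: str
    portal_ip: str


def plan_paths(initiator_ips, controller_portals):
    """Enumerate one path per (initiator IP, target portal) pair."""
    paths = []
    for controller, portals in controller_portals.items():
        for initiator_ip, portal_ip in product(initiator_ips, portals):
            paths.append(IscsiPath(initiator_ip, controller, portal_ip))
    return paths


def paths_per_controller(paths):
    """Count how many planned paths land on each controller."""
    counts = {}
    for path in paths:
        counts[path.controller] = counts.get(path.controller, 0) + 1
    return counts


# Hypothetical values: one dedicated iSCSI vNIC on the VM and
# four portals per controller (documentation-range addresses).
initiator_ips = ["192.0.2.10"]
controller_portals = {
    "CT0": ["192.0.2.101", "192.0.2.102", "192.0.2.103", "192.0.2.104"],
    "CT1": ["192.0.2.111", "192.0.2.112", "192.0.2.113", "192.0.2.114"],
}

paths = plan_paths(initiator_ips, controller_portals)
counts = paths_per_controller(paths)

for path in paths:
    print(f"{path.initiator_ip} -> {path.controller} {path.portal_ip}")
print(f"total paths: {len(paths)}, per controller: {counts}")
```

Comparing a plan like this against the number of sessions actually shown for the connected target is a quick way to spot a portal that was missed in step 4.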


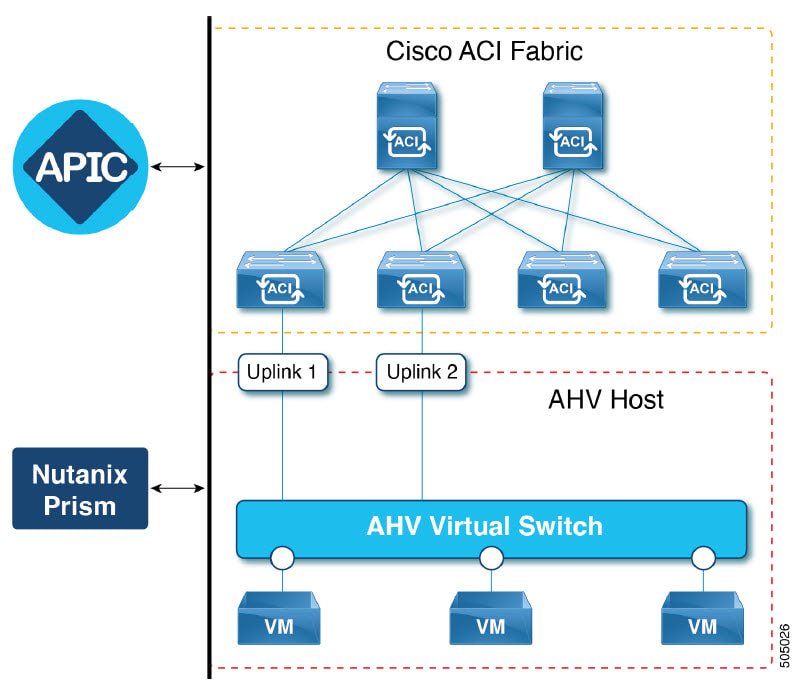

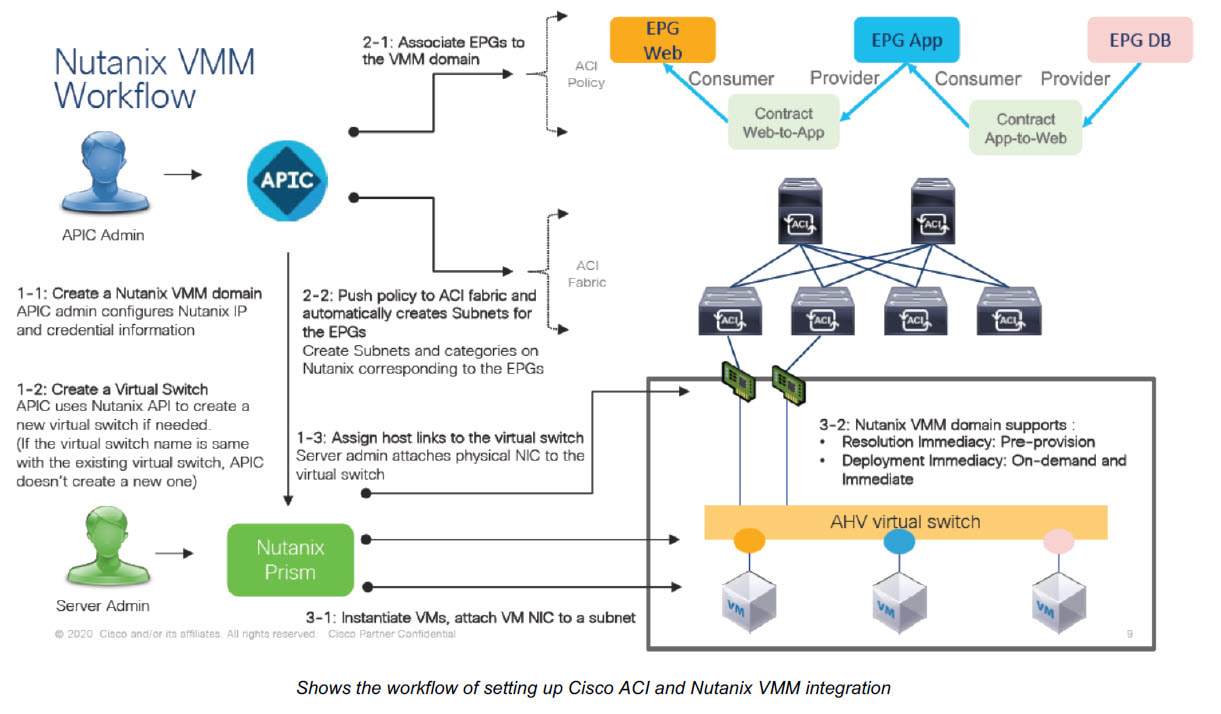

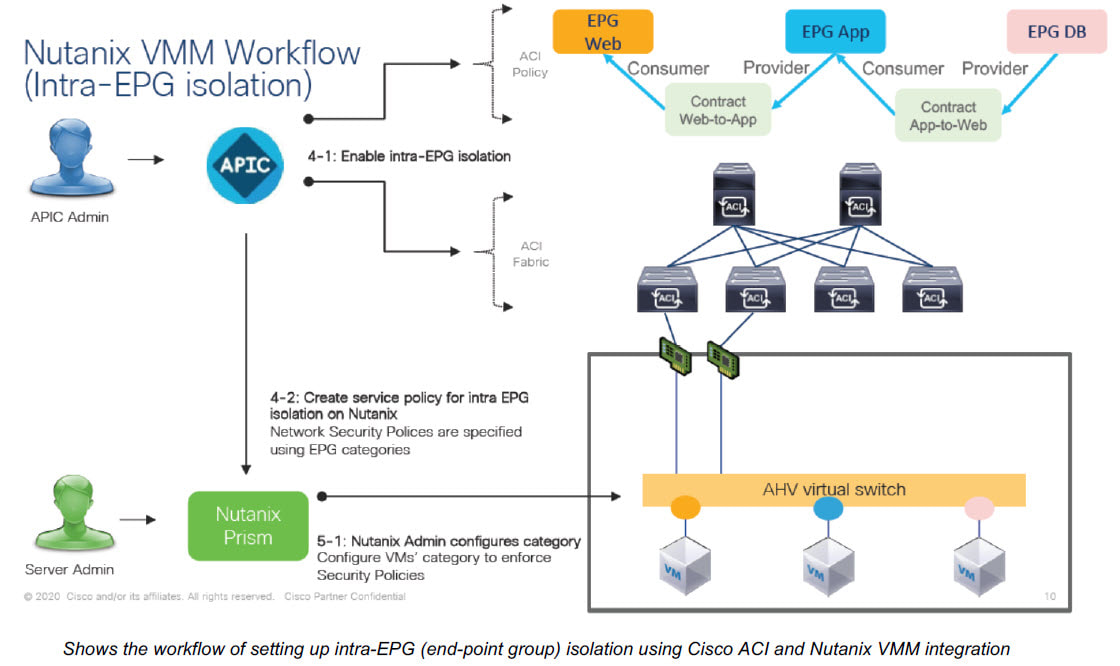

Great news for those using ACI in their environments who are used to ACI having visibility into their virtualized VMware environments: this functionality is coming to Nutanix!

From Cisco's release notes: "You can now integrate the Nutanix Acropolis Hypervisor (AHV) with Cisco Application Centric Infrastructure (ACI). The Cisco APIC integrates with Nutanix AHV and enhances the network management capabilities. The integration provides virtual and physical network automation and VM endpoints visibility in Cisco ACI." https://www.cisco.com/c/en/us/td/docs/dcn/aci/apic/kb/cisco-aci-nutanix-integration.pdf

You need to be running:

Cisco APIC: 6.0(3) (released August 2023) or newer

Nutanix:

To use current vSwitches without creating a NEW vSwitch (no vSwitch creation):
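As a quick illustration of the APIC requirement above, here is a small Python sketch that checks whether a version string meets the 6.0(3) minimum. It assumes version strings of the form `major.minor(maintenance + optional patch letters)`, for example `6.0(3d)`; the function names are made up for this example and are not part of any Cisco tooling.

```python
import re

# Assumed format: "6.0(3)" or "6.0(3d)" -> major.minor(maintenance + patch letters)
_APIC_VERSION = re.compile(r"^(\d+)\.(\d+)\((\d+)([a-z]*)\)$")

# Minimum release named in the text: APIC 6.0(3)
MINIMUM_APIC = (6, 0, 3, "")


def parse_apic_version(text):
    """Turn an APIC version string into a tuple that sorts correctly."""
    match = _APIC_VERSION.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized APIC version: {text!r}")
    major, minor, maintenance, patch = match.groups()
    return (int(major), int(minor), int(maintenance), patch)


def supports_nutanix_integration(version_text):
    """True when the version is 6.0(3) or newer."""
    return parse_apic_version(version_text) >= MINIMUM_APIC


for candidate in ["5.2(7f)", "6.0(2h)", "6.0(3)", "6.0(3d)", "6.1(1f)"]:
    status = "ok" if supports_nutanix_integration(candidate) else "too old"
    print(f"{candidate}: {status}")
```

Comparing parsed tuples rather than raw strings avoids the usual trap where "6.0(10)" sorts before "6.0(3)" as text.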

Benefits of the integration are:

The image above shows a sample topology. The Cisco APIC manages the ACI fabric and policies. The VMs connected to the virtual switch are managed by Nutanix Prism. The ACI fabric provides network connectivity for VMs in AHV hosts through the uplinks (Uplink1 and 2).

How it works: Cisco ACI uses EPGs, or endpoint groups, to deliver policy to sets of defined endpoints (i.e. VMs) within ACI to NCP AHV with Flow Network Security as a VMM solution.

Documentation from Cisco here:

Inclusion on Cisco’s ACI VMM solution webpage: https://www.cisco.com/c/dam/en/us/td/docs/Website/datacenter/aci/virtualization/matrix/virtmatrix.html

Cisco ACI 6.0.3 release notes mention the new Nutanix integration: https://www.cisco.com/c/en/us/td/docs/dcn/aci/apic/6x/release-notes/cisco-apic-release-notes-603.html

Cisco ACI and Nutanix AHV VMM Integration Guide/Tech Note: https://www.cisco.com/c/en/us/td/docs/dcn/aci/apic/kb/cisco-aci-nutanix-integration.html?dtid=osscdc000283

Big announcements aplenty at the .NEXT 2023 conference. In no particular order:

1. Nutanix Central: A SaaS-based, cloud-delivered solution that will provide a single interface for managing ALL of your Prism Central deployments, whether on-prem, cloud or MSP. In early access now(ish), with features set to roll out going forward. https://www.nutanix.com/blog/introducing-nutanix-central

2. Multicloud Snapshot Technology (MST): This allows snapshots directly to cloud-native datastores in S3.

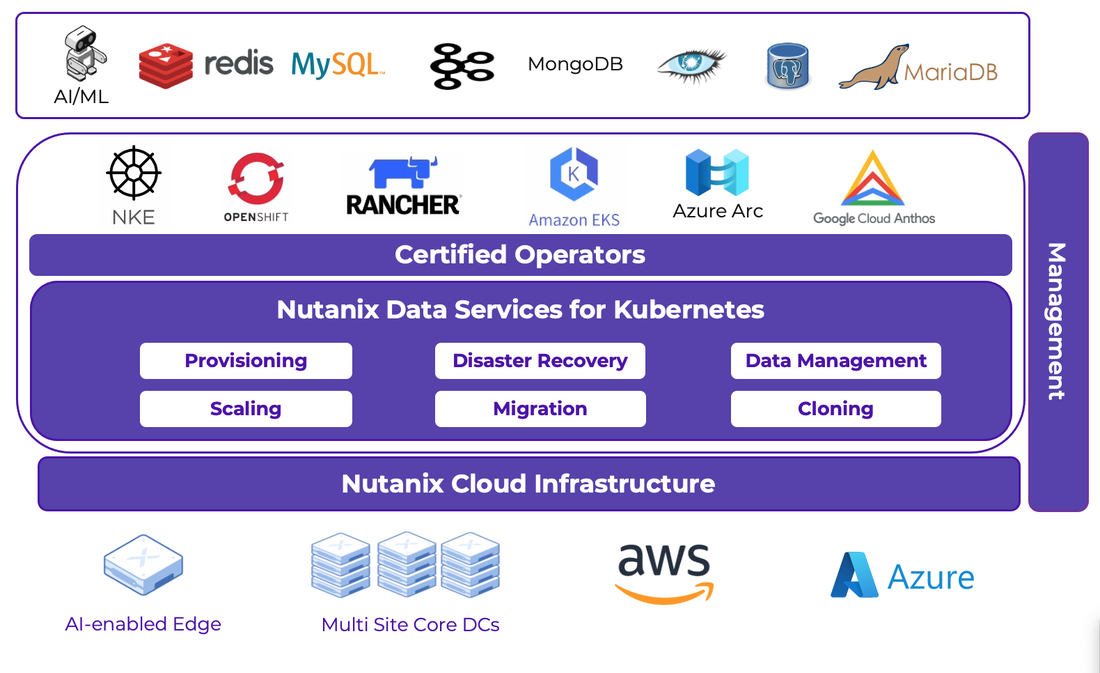

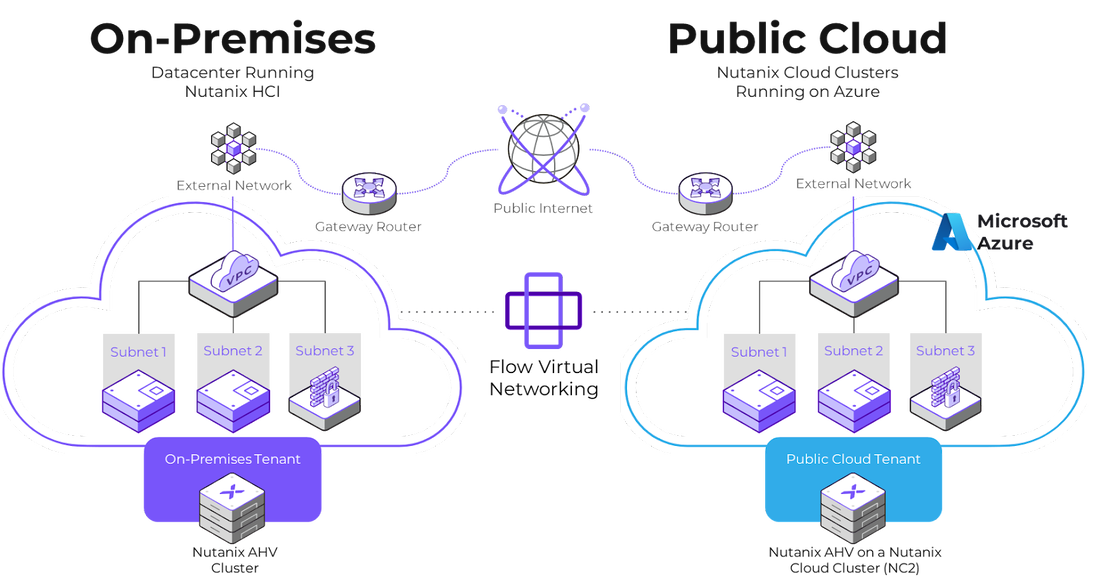

3. Project Beacon: This initiative aims to provide a portfolio of data-centric PaaS services available natively in the cloud. Its intent is to decouple applications and their data from the underlying infrastructure, giving developers greater flexibility to build applications once and run them across various environments, natively on Nutanix or in the public cloud. https://www.nutanix.com/blog/introducing-project-beacon

4. Nutanix Data Services for Kubernetes (NDK): In early access. NDK is designed to deliver enterprise-class storage, snapshots and DR capability to Kubernetes. https://www.nutanix.com/blog/nutanix-announces-early-access-of-ndk

5. NCP enhancements, which include the ability to dynamically apply security policies across multiple clouds, enabling secure, seamless virtual networks using Flow Virtual Networking. Available now in NC2/Azure, and in development for AWS. https://www.nutanix.com/blog/dynamic-approach-to-hybrid-multicloud-network-security

It was also a chance to meet up with old friends and make some new ones. NUG Chapter Champions in attendance met in the Solutions Expo for some hangout time and a group photo. Shout out to all the Nutanix Technology Champions in the Day 2 General Session.

Great conference, nice venue. It was nice having the conference so close to home base. Hope to see everybody in 2024!

History: We had been running Nutanix successfully for years on the ESXi platform. We initially landed on ESXi because that's what we were using when we decided to move to HCI-first. In an effort to streamline operations and lifecycle management, we took a serious look at AHV as an option. After all, it was included with the Nutanix licenses we were already paying for. We added an AHV cluster to our test lab and put it through its paces for a few months. Once we decided it was ready for primetime, we kicked off this project.

Our goals:

Challenges to overcome:

Phase: Planning

Phase: Buildout

Phase: Execution & Migration

Here are the steps to move virtual machines from ESXi to Nutanix AHV using Nutanix Move:

Phase: Aftermath

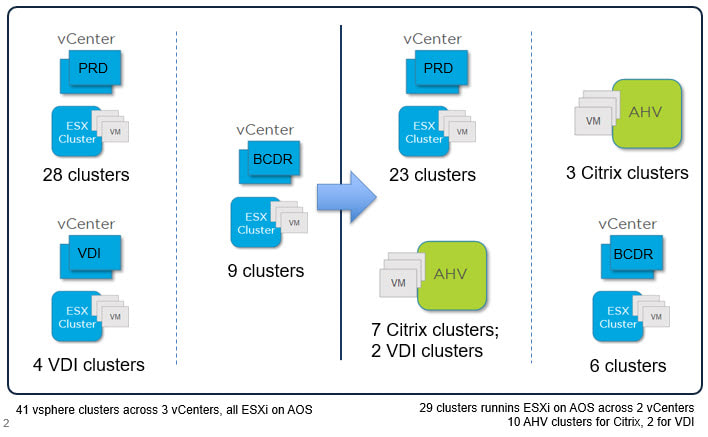

The goal: move an entire production Citrix & VDI platform from ESXi to AHV. Below is our before (left) and after (right).

What is delivered:

- EMR sessions daily: 10000+
- VDI sessions daily: 4800+
- General apps delivered: 900+
- Citrix users: 13000+

What makes it up:

- Published Apps: 1155 servers
- Infrastructure: 69 servers
- Primary data center hosts: 110
- Secondary data center hosts: 72

The POC

Cluster sizing

Workload & support challenges

The Citrix migration

The VDI migration

The migration was fairly painless and we actually saw improved density on the clusters running AHV instead of ESXi on top of AOS -- about a 10% improvement. The transition for our Citrix support staff was seamless.

The success of this project paved the way for our next project: converting our general production workloads from ESXi to AHV.

Chad Dorr

Engineering cloud, infrastructure, storage & virtualization solutions for 16+ years.

Archives

August 2023

Categories

All
